It is difficult to achieve multi-person VR walking in the same real space

Virtual technology, otherwise known as virtual reality (VR), uses computer simulation to generate a virtual three-dimensional space in which users can observe virtual objects in real time. As the user changes position, the computer recalculates the scene and displays the matching view of the virtual world, creating an immersive experience. One active area of VR research is improving the experience of users walking in a virtual setting.

A problem arises when multiple virtual reality users move in the same real space. The virtual space rarely matches the user's real space and is typically much larger than the real space the user is able to occupy. When two or more users occupy the same real space, they risk colliding with each other. One workaround is to separate the users into different rooms and let them meet in the same virtual space through a data network. Another possible solution is to let multiple users in the same room avoid collisions cooperatively with the help of avatars in virtual space; this way of handling multi-user movement in the same real space is currently a focus of industry research.

GCL proposes a redirected smooth mapping method to address this industry problem

Two methods are currently popular for addressing the discrepancy between real and virtual space. The first is the redirected walking (RDW) method, which applies subtle manipulations to the virtual space that escape the user's perception. It is based on the idea that vision is the main factor regulating the user's motion. However, a main drawback of RDW is that it can only be used for a single user.

The second method to bridge the gap between real and virtual space is through smooth assembly mapping (SAM), which remaps virtual space into the given real space in small patches. However, since this map does not use a unit scale, the mapped virtual space will be significantly distorted. The SAM method is optimized through an algorithm that measures the constraints in real space and the amount of distortion present and attempts to map the virtual space into the real space with the least distortion possible. SAM methods allow the user to physically move in a virtual space without interruption, but a significant disadvantage is the visual distortion present that diminishes the user’s virtual reality experience.

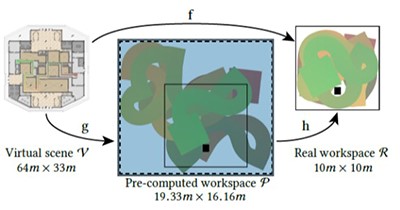

After analyzing the advantages and disadvantages of these two methods, Mr. Liu Ligang's team at the University of Science and Technology of China proposed a redirected smooth mapping (RSM) method that incorporates redirected walking into a smooth assembly map, generating a virtual scene with smaller bends and low distance distortion. The RSM method aims to establish a map f: SV → SR from the virtual space SV to the real space SR, transforming each point (u, v) in SV to a point (x, y) in SR. The virtual space SV is first divided into k blocks, each of which is mapped individually. The desired map f is then generated in two parts. First, an intermediate working space SP is established and an intermediate map g: SV → SP is computed. Second, RDW is applied to SP to create h: SP → SR, so that f is the composition of g followed by h.
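The two-stage construction can be illustrated with a minimal sketch. The maps below are toy stand-ins chosen only to show the composition of g followed by h; they are assumptions for illustration, not the maps computed by the RSM optimization, and the names `g`, `h`, `compose`, the scale factor, and the bend radius are all hypothetical.

```python
import math
from typing import Callable, Tuple

Point = Tuple[float, float]
Map = Callable[[Point], Point]


def g(p: Point, scale: float = 0.25) -> Point:
    """Toy intermediate map SV -> SP: a uniform shrink (assumed, not the real g)."""
    u, v = p
    return (scale * u, scale * v)


def h(p: Point, radius: float = 5.0) -> Point:
    """Toy RDW-style map SP -> SR: bends a straight path onto a circular arc."""
    s, t = p
    angle = s / radius   # arc length s along the path becomes an angle
    r = radius + t       # sideways offset t becomes a radial offset
    return (r * math.sin(angle), r * math.cos(angle) - radius)


def compose(outer: Map, inner: Map) -> Map:
    """Return the map p -> outer(inner(p))."""
    return lambda p: outer(inner(p))


# f maps a virtual point (u, v) to a real point (x, y) via the working space.
f = compose(h, g)

x, y = f((8.0, 0.0))
print(f"virtual (8, 0) -> real ({x:.3f}, {y:.3f})")
```

In this toy setup, a straight walk along the u axis in virtual space becomes a walk along a circle of radius 5 in real space, which is the kind of bending RDW relies on.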

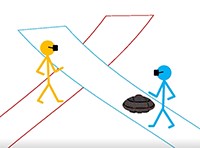

In order to minimize the risk of a collision between users, Mr. Liu’s team designed a system using virtual avatars. These dynamic avatars are generated in front of a user’s route when two users are at risk of collision. Users are reminded to stay clear of the avatar, thereby avoiding a collision between users.

NOKOV provides multi-user high-precision positioning solutions

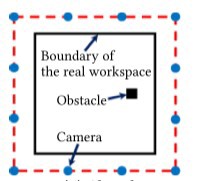

The University of Science and Technology of China used 12 NOKOV Mars 2H infrared optical motion capture cameras (represented by blue dots in the figure) in a multi-user virtual space movement and interaction experiment. The cameras covered the entire 10 m × 10 m test site, which was mapped to a virtual space measuring 64 m × 33 m. A steel column was placed in the room as an obstacle. Three markers were placed on each user's head; the average of the three marker positions was used to represent the user's position in the testing field.
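The position estimate described above is a centroid of the head markers. A minimal sketch follows, assuming the marker coordinates have already been obtained from the motion capture system as plain (x, y, z) tuples in metres; `head_position` is a hypothetical helper and no NOKOV SDK call is shown.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def head_position(markers: Sequence[Vec3]) -> Vec3:
    """Average the marker positions to get one point representing the user."""
    if not markers:
        raise ValueError("at least one marker position is required")
    n = len(markers)
    return (
        sum(m[0] for m in markers) / n,
        sum(m[1] for m in markers) / n,
        sum(m[2] for m in markers) / n,
    )


# Three head markers for one user (made-up coordinates, in metres).
markers = [(1.0, 2.0, 1.5), (2.0, 2.0, 1.5), (3.0, 5.0, 1.5)]
print(head_position(markers))
```

Averaging several markers also dampens the effect of jitter in any single marker, at the cost of losing head orientation, which would need the individual marker positions.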

The user's position in SV was named the virtual position; their position in g(SV) was the redirected position; and their position in f(SV), obtained by the NOKOV infrared optical motion capture cameras, was the real position (corresponding to the blue line in the figure). The RDW method relates the redirected position in g(SV) to the real position in f(SV). While the user was moving, the NOKOV motion capture system recorded the user's real position x. Dynamic reverse mapping was then used to obtain the user's virtual position y = f⁻¹(x). Finally, the intermediate map g was used to map the virtual position y to the redirected position z = g(y) = g(f⁻¹(x)), which corresponded to the location rendered to the user in the head-mounted display (HMD).
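The per-frame lookup z = g(f⁻¹(x)) can be sketched as follows. This is a minimal illustration under strong assumptions: the maps are simple invertible toy functions (a scale for g, a rotation plus shift for h), so the inverse of f can be written in closed form. The dynamic reverse mapping used in the experiment is not reproduced here, and all function names and constants are hypothetical.

```python
import math
from typing import Callable, Tuple

Point = Tuple[float, float]

SCALE = 0.25                 # assumed shrink factor for the toy g
THETA = math.radians(30.0)   # assumed rotation for the toy h
SHIFT = (5.0, 5.0)           # assumed offset for the toy h


def g(y: Point) -> Point:
    """Toy map SV -> SP."""
    return (SCALE * y[0], SCALE * y[1])


def g_inv(z: Point) -> Point:
    return (z[0] / SCALE, z[1] / SCALE)


def h(z: Point) -> Point:
    """Toy map SP -> SR: rotate by THETA, then shift."""
    c, s = math.cos(THETA), math.sin(THETA)
    return (c * z[0] - s * z[1] + SHIFT[0], s * z[0] + c * z[1] + SHIFT[1])


def h_inv(x: Point) -> Point:
    c, s = math.cos(THETA), math.sin(THETA)
    dx, dy = x[0] - SHIFT[0], x[1] - SHIFT[1]
    return (c * dx + s * dy, -s * dx + c * dy)


def f(y: Point) -> Point:
    """Virtual position -> real position (g followed by h)."""
    return h(g(y))


def f_inv(x: Point) -> Point:
    """Real position -> virtual position."""
    return g_inv(h_inv(x))


def redirected_position(
    x: Point,
    inverse_map: Callable[[Point], Point],
    intermediate_map: Callable[[Point], Point],
) -> Point:
    """Compute z = g(f^-1(x)) for a tracked real position x."""
    y = inverse_map(x)           # virtual position
    return intermediate_map(y)   # redirected position used for rendering


x_real = f((8.0, 4.0))  # pretend the tracker reported this real position
print(redirected_position(x_real, f_inv, g))
```

Passing the inverse map and g in as callables keeps the per-frame step independent of how the maps are actually represented, for example as per-block meshes.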

The collision prevention algorithm needs to determine the relative positions of the two users in both real and virtual space and calculate where to direct each of them. Through the NOKOV motion capture system, two users A and B may be detected to be very close to each other in real space while their redirected positions in g(SV) are far apart. Because the two users cannot see each other through their HMDs, an avatar may appear in user B's display, prompting user B to stay away from the avatar and thus avoid a collision with user A.
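The trigger condition described above can be sketched as a simple distance test. This is an assumed simplification, not the team's algorithm: the function name `needs_avatar` and both threshold values are hypothetical, and deciding where to place the avatar on the user's route is left out.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def distance(a: Point, b: Point) -> float:
    return math.hypot(a[0] - b[0], a[1] - b[1])


def needs_avatar(
    real_a: Point,
    real_b: Point,
    redirected_a: Point,
    redirected_b: Point,
    real_limit: float = 1.5,        # assumed safety distance in the room (m)
    redirected_limit: float = 3.0,  # assumed distance beyond which B would not notice A (m)
) -> bool:
    """Return True when users are close in the room but far apart in what they see."""
    close_in_room = distance(real_a, real_b) < real_limit
    far_in_display = distance(redirected_a, redirected_b) > redirected_limit
    return close_in_room and far_in_display


# Users 0.5 m apart in the room, but 20 m apart in their redirected positions.
print(needs_avatar((1.0, 1.0), (1.5, 1.0), (0.0, 0.0), (20.0, 0.0)))
```

A production system would likely also use the users' velocities to predict a collision before the distance threshold is crossed; that refinement is not shown.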

In addition to the University of Science and Technology of China, NOKOV has also cooperated closely on virtual reality with many domestic universities and industry-leading companies, such as the School of Vehicles and Transportation of Tsinghua University and Vision Digital Technology Co., Ltd.